AI in social media marketing raises ethical concerns for agencies seeking efficiency and ROI. These concerns range from data privacy to algorithmic bias and content manipulation. Understanding these issues is the first step in responsibly implementing AI technologies.

Ethical Risks of AI

Data privacy

Data is the fuel that powers AI algorithms, but not all data collection is ethically justifiable. When agencies deploy AI tools for analytics, they often collect a range of data from user behaviors to personal preferences.

Collecting data raises a critical question: What kind of data is ethical to collect? Agencies need to rigorously assess the need and purpose of each data point gathered to mitigate ethical risks.

When agencies collect data, do users understand the extent to which their information is being used? Obtaining explicit, informed consent is crucial.

Consider providing clear privacy policies and opt-in mechanisms that allow users to control their data.

Agencies cannot afford to be uninformed about data protection laws, such as GDPR or CCPA. These laws have strict guidelines on data collection, usage, and storage. Agencies must make sure they are in compliance to prevent both ethical lapses and legal consequences.

Algorithmic bias

Algorithms can inherit biases present in society, leading to unfair or discriminatory outcomes.

Biases can be introduced into AI algorithms by:

- Skewed training data

- Subjective human labeling

- Design choices made when building the algorithm

An algorithmically biased social media ad campaign might perpetuate harmful stereotypes or fail to reach diverse audiences, potentially damaging your agency’s reputation. Agencies that use AI tools should scrutinize the underlying data sources for potential bias.

Content manipulation

The same AI technologies that make content creation easier can also be used for nefarious purposes.

For example, AI technology for creating hyper-realistic fake content (often called deepfakes) is advancing rapidly. Deepfakes are mostly used online for entertainment purposes. (One NSFW example is placing Jerry Seinfeld in an iconic scene from the movie “Pulp Fiction.”)

However, there are also deepfakes used by criminals for much more sinister purposes.

For example, during the early stages of Russia’s invasion of Ukraine, a hacked Ukrainian news station played a deepfake of the Ukrainian president Volodymyr Zelensky telling his troops to “lay down arms and return to their families.” The video also surfaced on social media, although the social platforms quickly removed it from circulation.

The impact of this video is unknown. At the very least, the deepfake contributed to the ongoing misinformation being spread across Ukraine at that time. This poses a serious concern not only for agencies but for society as a whole. Manipulated content can easily be used to spread harmful misinformation online.

Surveillance and monitoring

Another ethical concern is that AI’s capacity for extensive data collection can tip over into surveillance. A thin line exists between personalization and intrusion, and consumers are becoming increasingly aware of it.

According to a survey by Pew Research Center, 79% of U.S. adults are concerned about how companies use their data.

While personalized ads can improve user experience, they also require intrusive data collection methods that closely track user behavior.

Well-defined boundaries for what constitutes acceptable monitoring need to be established. Exceeding these boundaries veers into unethical territory and potentially violates privacy norms and laws.

Being aware of these ethical AI issues is not just about avoiding legal repercussions. It’s about building a sustainable and responsible business. Agencies should strive for ethical integrity as they innovate, setting an industry standard that respects both individual privacy and societal norms.

Source: Created with Midjourney. ChatGPT was used to write the prompt below. Professionals are gathered around a conference table. They are deeply engaged in ethical decision-making; with laptops and documents spread out before them --ar 16:9

Ethical Guidelines for Using AI

The adoption of ethical AI practices is not just a matter of legal compliance but also a competitive advantage and brand differentiator.

By acknowledging and addressing ethical AI concerns, agencies can protect themselves from:

- Reputational damage

- Legal repercussions

- Loss of consumer trust

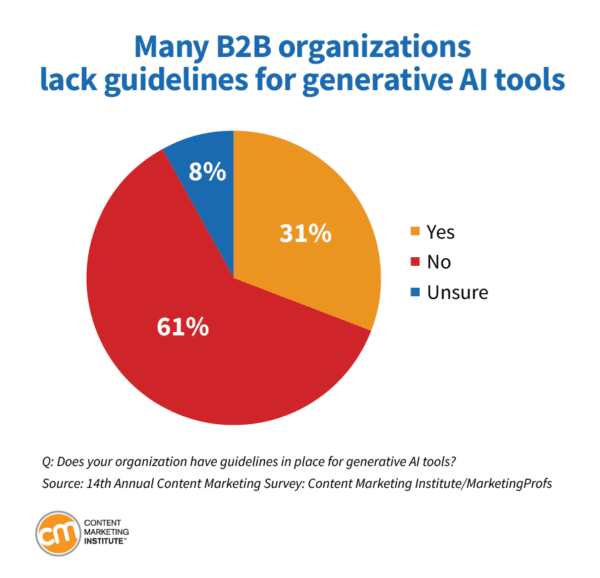

According to the 2024 B2B Content Marketing Report from the Content Marketing Institute, MarketingProfs, and Brightspot, the biggest problem that marketers have is the lack of guidelines for generative AI tools.

Below are comprehensive guidelines agencies should consider to use AI ethically.

Transparency

Being transparent is essential for establishing and maintaining consumer and client trust.

Agencies can demonstrate transparency by:

- Clearly disclosing when and how AI is used in your marketing campaigns

- Ensuring privacy policies and terms of service are easily accessible on your website or other client-facing platforms

- Using clear language to explain AI processes and data usage to clients and consumers

Fairness and inclusivity

Ethical AI should aim for fairness. It needs to ensure that all people and communities are treated equally by algorithms.

Agencies should adopt proactive strategies to identify and eliminate bias, including:

- Auditing algorithms for potential biases

- Engaging in third-party audits for an unbiased evaluation

- Diversifying the dataset to include a broader range of perspectives
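As a rough illustration of what auditing for bias can look like in practice, the sketch below compares how often an ad was shown to eligible users in different audience groups. The group names, the log format, and the 0.8 review threshold are all assumptions made for this example, not an industry standard, and a real audit would look at more than one metric.

```python
from collections import Counter

def delivery_rates(impressions):
    """Return the share of eligible users in each group who were shown the ad.

    `impressions` is an iterable of (group, was_shown) pairs. The format is
    hypothetical; adapt it to whatever your ad platform exports.
    """
    eligible = Counter()
    shown = Counter()
    for group, was_shown in impressions:
        eligible[group] += 1
        if was_shown:
            shown[group] += 1
    return {group: shown[group] / eligible[group] for group in eligible}

def disparity_ratio(rates):
    """Ratio of the lowest delivery rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was shown the ad, so there is no gap to measure
    return min(rates.values()) / highest

# Made-up audit log: 100 eligible users per group.
log = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = delivery_rates(log)
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cut-off; pick one that fits your campaign
needs_review = ratio < REVIEW_THRESHOLD
```

A low ratio does not prove the algorithm is biased, but it is a useful signal that the campaign deserves a closer human review.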

Adapt to consumer preferences

Consumers are becoming increasingly concerned with how companies gather and use their information. Protective behaviors are on the rise.

Many consumers want to regulate the information they share with companies and protect their data.

In fact, 87% of US online adults use at least one privacy- or security-protecting tool online.

So, recognizing the privacy inclinations of your consumer base is key. By tailoring their data strategy to these preferences, agencies can establish themselves as brands that respect user privacy.

Given that data privacy is one of the most significant concerns with AI, agencies must learn to prioritize consumer privacy over other concerns.

How to move to a privacy-first approach

- Gather essential data only

The focus shouldn’t be on acquiring as much data as possible but rather on obtaining data that adds value to customer experiences. Collect only the data that is necessary for the specific purpose. Recognize which data points are crucial for customer engagement and zero in on them.

- Solicit data responsibly

After determining which data is indispensable, the next move is to develop straightforward and considerate ways to obtain this information. Allow users to opt in rather than automatically collecting data. This approach is in harmony with legal frameworks like GDPR, CCPA, and PIPEDA, all of which emphasize the importance of informed consent and data limitations.

- Store data securely

Ensure robust security measures to protect stored data from breaches. Existing tools and frameworks can help agencies prioritize data security. These include encryption methods, privacy impact assessments, and regular security audits.

Source: Image created using Midjourney with the following prompt: Data security symbol --ar 16:9
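The first two privacy-first steps above, gathering only essential data and collecting it on an opt-in basis, can be sketched in a few lines. This is a minimal illustration rather than a complete consent system: the field names, the `ESSENTIAL_FIELDS` allow-list, and the `collect` helper are all hypothetical.

```python
# Fields this hypothetical campaign actually needs; everything else is dropped.
ESSENTIAL_FIELDS = {"user_id", "email", "preferred_channel"}

def collect(raw_record, opted_in):
    """Return a minimized copy of the record, or None without consent."""
    if not opted_in:
        return None  # no opt-in, so nothing is collected
    # Keep only the allow-listed fields (data minimization).
    return {key: value for key, value in raw_record.items() if key in ESSENTIAL_FIELDS}

raw = {
    "user_id": "u-123",
    "email": "pat@example.com",
    "preferred_channel": "instagram",
    "birth_date": "1990-01-01",         # not needed for this purpose
    "location_history": ["2024-01-05"], # not needed for this purpose
}

stored = collect(raw, opted_in=True)    # only the three essential fields remain
skipped = collect(raw, opted_in=False)  # None: the user never opted in
```

Using an allow-list rather than a block-list means that any new field added upstream is excluded by default until someone decides it is genuinely needed.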

Corporate social responsibility

Ethical AI practices should align with broader corporate social responsibility goals to contribute positively to society. This involves treating ethical AI not as a standalone issue but as one intrinsically tied to broader ethical practices and corporate values.

For example, adopting ethical AI can become a part of the agency’s value proposition. By setting a high ethical standard, agencies not only protect themselves but also create a positive brand image, thus attracting clients and consumers who prioritize ethical considerations.

By adopting these ethical guidelines, agencies can implement AI in their social media marketing strategies with a clearer understanding of the ethical landscape. The result is a more responsible use of technology that benefits agencies, clients, and consumers.

Regulatory Considerations of AI

When it comes to the ethical implementation of AI in social media marketing, regulatory compliance is non-negotiable.

Understanding and adhering to data protection laws not only helps in avoiding legal troubles but also reinforces an agency’s commitment to ethical practices. Let’s take a detailed look at some key regulatory frameworks that govern data protection around the world.

General Data Protection Regulation (GDPR)

Originating in the European Union, GDPR has set the global standard for data protection and privacy.

Scope and jurisdiction: GDPR is not limited to companies based in the EU. Any agency that processes the personal data of people in the EU, for example by offering them services or monitoring their behavior, must comply.

Key provisions:

- Data subject rights: Includes the right to access, rectify, and erase personal data.

- Lawful processing: Data can only be processed under lawful conditions such as consent.

- Data minimization: Collection of data should be limited to what is necessary for the intended purpose.

What it means for agencies: Failure to comply with GDPR can result in hefty fines, up to €20 million or 4% of the company’s annual global turnover, whichever is higher. Non-compliance could also damage the agency’s reputation.
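To make the "whichever is higher" rule concrete, here is a small worked example. The function name and the turnover figures are made up for illustration, and the result is only the upper limit described above, not a prediction of what a regulator would actually impose.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur):
    """Upper limit described above: EUR 20 million or 4% of turnover, whichever is higher."""
    four_percent = annual_global_turnover_eur * 4 / 100
    return max(20_000_000, four_percent)

# Hypothetical agency with EUR 5 million turnover:
# 4% is EUR 200,000, so the EUR 20 million figure is the higher one.
small_agency_cap = gdpr_max_fine_eur(5_000_000)

# Hypothetical group with EUR 1 billion turnover:
# 4% is EUR 40 million, which exceeds EUR 20 million.
large_group_cap = gdpr_max_fine_eur(1_000_000_000)
```

For smaller agencies, the fixed €20 million figure is the relevant ceiling, which is why GDPR exposure is not just a concern for large companies.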

California Consumer Privacy Act (CCPA)

While not as expansive as GDPR, the CCPA has significant implications for agencies and businesses that operate in California or target California residents.

Key provisions:

- Right to know: Consumers have the right to know what personal information is collected, used, shared, or sold.

- Opt-out: Consumers can opt out of the sale of their personal information.

In November 2020, California voters approved Proposition 24, the California Privacy Rights Act (CPRA), which amended the CCPA and added privacy protections that took effect on January 1, 2023. Consumers now have rights in addition to those above, such as:

- The right to correct inaccurate personal information that a business has about them; and

- The right to limit the use and disclosure of sensitive personal information collected about them.
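One way an agency might honor the opt-out and limit-use rights listed above is to check a consumer's stored preferences before any record leaves its systems. The sketch below assumes a hypothetical `ConsumerPrefs` record and an illustrative list of sensitive fields. Which fields count as sensitive, and what counts as a sale, are legal questions this example does not answer.

```python
from dataclasses import dataclass

@dataclass
class ConsumerPrefs:
    """Hypothetical per-consumer privacy flags an agency might store."""
    opted_out_of_sale: bool = False
    limit_sensitive_use: bool = False

# Assumed examples only; not a legal definition of sensitive information.
SENSITIVE_FIELDS = {"precise_location", "health_notes"}

def record_for_sale(record, prefs):
    """Return the data that may be passed to a third party, or None."""
    if prefs.opted_out_of_sale:
        return None  # the consumer opted out of the sale of their data
    if prefs.limit_sensitive_use:
        # Drop the fields the consumer asked to have restricted.
        return {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}
    return dict(record)

record = {"user_id": "u-42", "interests": "running", "precise_location": "34.05,-118.24"}

blocked = record_for_sale(record, ConsumerPrefs(opted_out_of_sale=True))
limited = record_for_sale(record, ConsumerPrefs(limit_sensitive_use=True))
```

Running every outbound record through a single check like this keeps the opt-out logic in one place instead of scattering it across campaign tools.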

What it means for agencies: The rights in the CCPA apply only to California residents. Still, agencies that operate in California or target its residents should know the law, as non-compliance can lead to substantial penalties. Just ask Sephora, which in 2022 agreed to pay $1.2 million to settle allegations that it violated the CCPA.

Personal Information Protection and Electronic Documents Act (PIPEDA)

This Canadian federal law governs how private sector organizations collect, use, and disclose personal information in the course of commercial activities.

Key provisions:

- Consent: Meaningful consent is required for collecting, using, or disclosing personal information. It may be express or implied, depending on the sensitivity of the information.

- Limited Collection: Only the information necessary for the identified purposes can be collected.

- Accountability: Organizations must take responsibility for the information they collect and how it is used, stored, and disclosed.

What it means for agencies: Agencies must evaluate their data collection and processing practices to ensure they align with PIPEDA requirements. Failure to comply can result in complaints to the Privacy Commissioner of Canada, and in some cases, legal action and fines.

Being aware of and compliant with PIPEDA is crucial for agencies with any commercial activities linked to Canada. Like GDPR and CCPA, PIPEDA compliance not only helps avoid legal issues but also contributes to building a brand that is committed to ethical data practices.

Other regulatory frameworks

Other jurisdictions have their own data protection laws. Notable ones include:

- Personal Data Protection Act (PDPA) in Singapore

- Data Protection Act in the United Kingdom

- Lei Geral de Proteção de Dados (LGPD) in Brazil

Stay Updated on Legislative Changes for Ethical AI Use

Privacy regulations are constantly changing. Agencies need to be proactive and stay updated on changes that may impact them.

Agencies should regularly monitor legal updates related to AI and data privacy both domestically and internationally. They should also consult legal experts who specialize in data protection and AI ethics to ensure full compliance.

By complying with these regulatory considerations and using a privacy-first approach, agencies can better safeguard against legal complications and avoid fines for non-compliance. But more importantly, doing so will define your agency as an ethically responsible brand that is respected and trusted by its clients on social media.